When it comes to college rankings, UC Davis students appear to care far more about how high or low their school is rated than about the methodology behind the rating or what it is actually trying to measure, according to a recent unscientific survey conducted by Dateline.

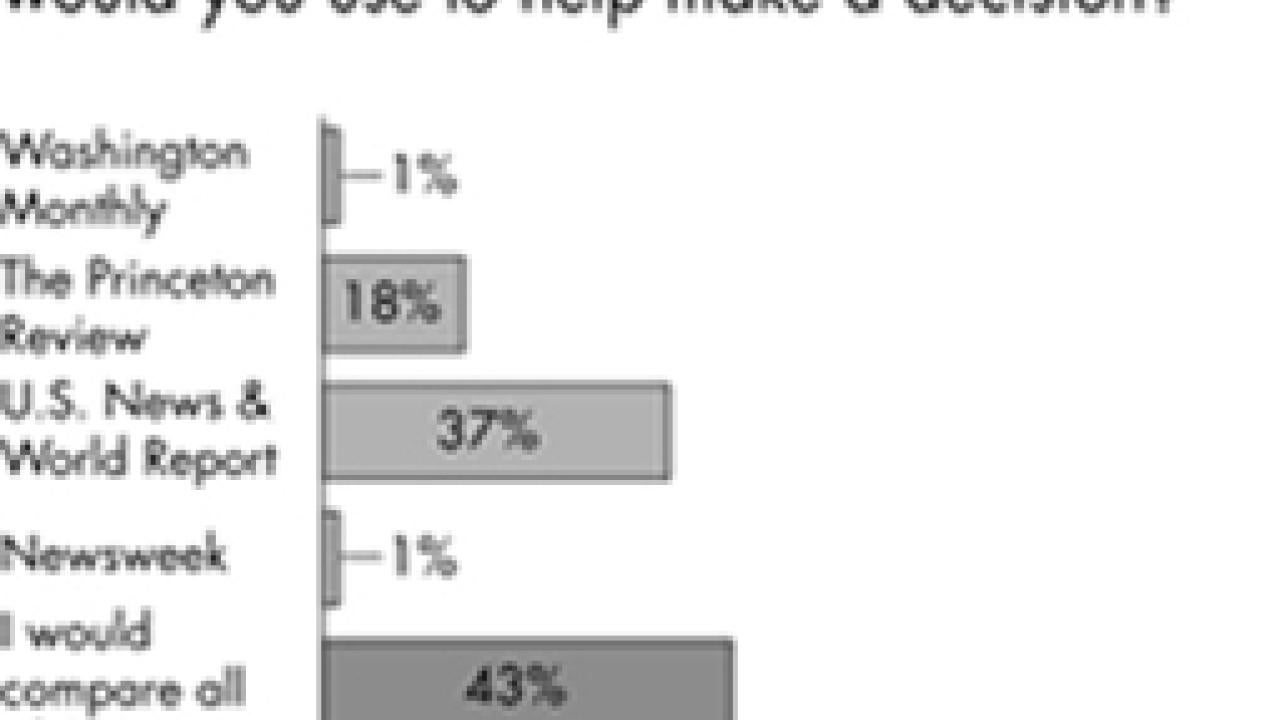

Last month, Dateline surveyed 60 students, some in classrooms and some through online university forums. Of the 51 who said rankings matter to them, more than half (57 percent) said they do not check the methodology.

"I didn't care to check ranking methodology when applying to Davis," said Elaine Siegel, a first-year graduate student in education. "My parents gave me a better idea than the ranking guides when it came down to which UC to choose."

But Gary Sue Goodman, assistant director of the University Writing Program, thinks it is unwise to rely on rankings without knowing how they are compiled.

"I think that relying on these systems with very little sense of what the ranking is based on is absurd," she said. "It's giving people a false sense of 'I'm really finding out what's the best school' when in reality the best school for them might be chosen on entirely different criteria."

U.S. News & World Report's annual college rankings are the best-known and most controversial. The magazine ranks schools according to such criteria as the opinions of "top academics," selectivity and faculty salaries.

According to a Chronicle of Higher Education analysis of U.S. News data from the past 24 years, the publication's rankings "seem to overwhelmingly favor private institutions." Editor Brian Kelly defends the rankings, pointing out how widely used they are. The methodology "is what it is because we say it is. It's our best judgment of what is important," he said.

Washington Monthly thought it had a better idea. In 2005, the magazine launched a novel ranking system whose methodology measures a university's and its students' commitment to serving America. Its measures include the number of alumni currently serving in the Peace Corps and the percentage of federal work-study grants devoted to community service.

These rankings stand in contrast to the more popular lists from The Princeton Review and U.S. News & World Report, both of which rate universities on more traditional academic yardsticks, including student/faculty ratio, student scores on standardized tests and the rate of alumni giving.

For 2006, Washington Monthly ranks four UCs in the top 10, including UC Davis at number 10. Ivy League schools and others traditionally found at the top of popular ranking systems are scattered throughout Washington Monthly's list: Yale ranks 12th, Harvard 28th and Princeton comes in at 43rd.

"It's not so much that we don't like (popular college guides); they serve a different goal," said Zachary Roth, an editor at Washington Monthly. "There's a place to tell parents where to go to school and what it's worth. What people also need is a guide to what people are doing to help the country."

The magazine's ranking system uses three criteria to generate a university's overall score. In its 2006 article "A Note on Methodology," Washington Monthly identifies these categories as community service, research and social mobility. Each category is weighted equally. Research-oriented universities are labeled national universities in the ranking guide, and liberal arts universities are ranked separately.

Washington Monthly's community service score measures the percentage of students enrolled in the Army and Navy Reserve Officer Training Corps, and the percentage of alumni currently serving in the Peace Corps. The magazine also measures the percentage of federal work-study grants devoted to community service projects based on statistics from the Corporation for National and Community Service, which tracks how Americans contribute to serving their country.

The magazine's research score measures the total amount of an institution's research spending, counts the number of doctorates awarded in the sciences and engineering (excluding social sciences) and measures the percentage of alumni who have gone on to receive doctorates.

Why not count doctorates in the social sciences and arts?

"Because we're ultimately trying to rank a college on the level that it tries to encourage innovation in economic growth, which isn't really covered in the arts," said Roth.

The social mobility score uses both the number of students receiving Pell grants and the school's average SAT score. These numbers are used to predict a likely graduation rate for all students. Then, according to "A Note on Methodology," the score predicts "… the percentage of students on Pell grants based on SAT scores." It is not clear how this is done.

"We have the data on Pell grants and we also have the data on SATs. We use these to predict grad rates," said Roth. "But I'm told I can't give out how we do it other than to say we use a regression analysis."

Washington Monthly ranks each university by its own measures and then lists the school's U.S. News & World Report ranking in a right-hand column.

Unlike Washington Monthly, U.S. News & World Report does not weight all of its criteria equally. The publication states: "(We) gather data from each college for up to 15 indicators of academic excellence. Each factor is assigned a weight that reflects our judgment about how much a measure matters. Finally, the colleges in each category are ranked against their peers, based on their composite weighted score."

The three categories that receive the most weight are peer assessment (the opinions of "top academics"), retention (how many students in the freshman class return the following year) and faculty salary.

Goodman, of the University Writing Program, suggested that students and parents should find their own ways of choosing the best school.

"Instead of relying on rankings, people should judge schools based on what matters to them," she said. "If they're unsure how to judge them, they should analyze the ranking systems to see what they mean."

Saul Sugarman is a Dateline intern.

Media Resources

Clifton B. Parker, Dateline, (530) 752-1932, cparker@ucdavis.edu